My olduse.net exhibit has now finished replaying the first year of historical Usenet posts from 30 years ago, in real time.

That was only twelve thousand messages, probably fewer than many people get on Facebook in a year. But if you read along this year, you probably have a much better feel for what early Usenet was like. If you didn't, it's not too late to start: nine years of Usenet's flowering lie ahead.

I don't know how many people followed along. I read a large fraction of the messages, though not every one. I see 40 or so unique NNTP connections per day, and some seem to stick around and read for quite a while, so I'm guessing there might be a few hundred people following on a weekly basis.

If you're not one of them, and don't read our olduse.net blog, here are a few of the year's highlights:

- After I announced the exhibit, it hit all the big tech sites like Hacker News and Slashdot, and Boing Boing. A quarter million people loaded the front page. For a while, every one of those involved a login to my server to run tin. I have some really amusing `who` listings. Every post initially available was read an average of 100 times, probably more often than it was read the first time around.

- Beautiful ASCII art Usenet maps were posted as the network mushroomed; I collected them all. In a time-delayed collaboration with Mark, I produced a more modern version.

- We watched sites struggle with the growth of Usenet, and followed along as B-news was developed and deployed.

- In 2011, Dennis Ritchie passed away. We still enjoy his wit and insight on olduse.net.

- We enjoyed the first posts of things like The Hacker's Dictionary and DEC Wars.

- Some source code posted to Usenet still compiles. I haven't found anything to add to Debian, yet. :)

I will probably be expiring the first year's messages before too long, to give a less cluttered view. So if you want to read them, hurry up!

Nicaragua, here I come! I plan to be at DebCamp for a few days too, taking advantage of some time on internet-better-than-dialup to work on fixing the tasks on the CDs. I'll also be available for one-on-one sessions on debhelper, debconf, debian-installer development, pristine-tar, or git-annex. Give me a yell if you'd like to spend some time learning about any of these.

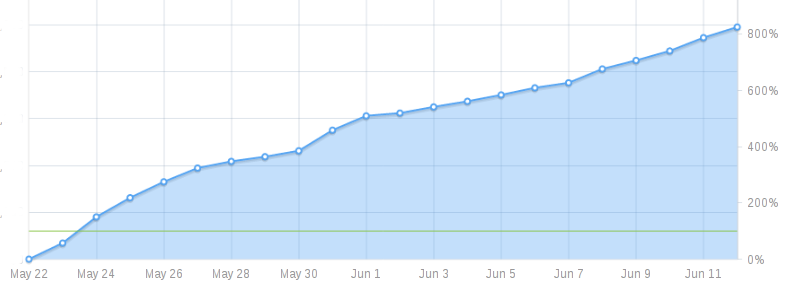

Also, my git-annex Kickstarter ends in 3 days. It has reached heights that will fund me, at a modest rate, for a full year of development!

(I'm also about to start renting my house for the first time. Whee!)

I have an interesting problem: How do I shoehorn "hired by The Internet for a full year to work on Free Software" into my resume?

Yes, the git-annex Kickstarter went well. :) I had asked for enough to get by for three months. Multiple people thought I should instead work on it for a full year and really do the idea justice. Jason Scott was especially enthusiastic about this idea. So I added new goals and eventually it got there.

Don Marti thinks the success of my Kickstarter validates crowdfunding for Free Software. Hard to say; this is not the first Free Software to be funded on Kickstarter. Remember Diaspora?

Here's what I think worked to make this a success:

I have a pretty good reach with this blog. I reached my original goal in the first 24 hours, and during that time, probably 75% of contributions were from people I know, people who use git-annex already, or people who probably read this blog. Oh, and these contributors were amazingly generous.

I had a small, realistic, easily achievable goal. This ensured my project was quickly visible in "successful projects" on Kickstarter, and stayed visible. If I had asked for a year up front, I might not have fared as well. It also led to my project being a "Staff Pick" for a week on Kickstarter, which exposed it to a much wider audience. In the end, nearly half my funding came from people who stumbled over the project on Kickstarter.

The git-annex assistant is an easy idea to grasp, at varying levels of technical expertise. It can be explained by analogy to Dropbox, as something on top of git, or as an approach to avoiding cloud vendor lock-in. Most of my previous work would be much harder to explain to a broad audience in a Kickstarter. But this still appeals to very technical audiences too. I hit a sweet spot here.

I'm enhancing software I've already written. This made my Kickstarter a lot less vaporware than some other software projects on Kickstarter. I even had a branch in git where I'd made sure I could pull off the basic idea of tying git-annex and inotify together.

I put in a lot of time on the Kickstarter side. My 3-minute video, amateurish as it is, took three solid days of work to put together. (And 14 thousand people watched it... eep!) I added new and better rewards, posted fairly frequent updates, answered numerous questions, etc.

I managed to have at least some Kickstarter rewards that are connected to the project in relevant ways. This is a hard part of Kickstarter for Free Software; just giving backers a copy of the software is not an incentive for most of them. A credits file mention pulled in a massive 50% of all backers, but they were mostly casual backers. On the other end, 30% of funds came from USB keychains, which will make a high-quality reward and have a real use case with git-annex.

The surprising but gratifying part of the rewards was that 30% of funds came from rewards that were essentially "participate in this free software project", i.e., "help set my goals" and "beta tester". It's cool to see people find real value in participating in Free Software.

I was flexible when users asked for more. I only hope I can deliver on the Android port. It's gonna be a real challenge. I even eventually agreed to spend a month trying to port it to Windows. (I refused to develop an iOS port even though it could probably have increased my funding; Steve's controlling ghost and I don't get along.)

It seemed to help the slope of the graph when I started actually working on the project, while the Kickstarter was still ongoing. I'd reached my original goal, so why not?

I've already put two weeks of work into developing the git-annex assistant.

I'm blogging about my progress every day on its development blog.

The first new feature, a `git annex watch` command that automatically commits changes to git, will be in the next release of git-annex.
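To make the idea concrete, here is a minimal sketch of a watch-and-commit loop. It assumes simple polling with `os.stat` rather than inotify, and plain `git add -A` / `git commit` rather than annexing file contents; the function names `snapshot`, `changed`, `commit_all`, and `watch` are hypothetical. This is not how `git annex watch` is implemented, only an illustration of "notice changes, then commit them".

```python
import os
import subprocess
import time


def snapshot(root):
    """Map each file path under root (skipping .git) to its (mtime_ns, size)."""
    state = {}
    for dirpath, dirnames, filenames in os.walk(root):
        # Don't descend into git's own metadata directories.
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path)
            except FileNotFoundError:
                continue  # file vanished between listing and stat
            state[path] = (st.st_mtime_ns, st.st_size)
    return state


def changed(old, new):
    """Return the sorted paths that were added, modified, or deleted."""
    paths = set(old) | set(new)
    return sorted(p for p in paths if old.get(p) != new.get(p))


def commit_all(root, message):
    """Stage everything (including deletions) and commit.

    The commit's exit status is not checked, so "nothing to commit" is ignored.
    """
    subprocess.run(["git", "-C", root, "add", "-A"], check=True)
    subprocess.run(["git", "-C", root, "commit", "-q", "-m", message], check=False)


def watch(root, interval=2.0):
    """Poll forever, committing whenever the working tree changes."""
    previous = snapshot(root)
    while True:
        time.sleep(interval)
        current = snapshot(root)
        diff = changed(previous, current)
        if diff:
            commit_all(root, "watch: %d path(s) changed" % len(diff))
        previous = current
```

Calling `watch("/path/to/repo")` would rescan the tree every two seconds. An inotify-based watcher avoids that full rescan, which matters a lot for large repositories.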

I can already tell this is going to be a year of hard work, difficult problems, and great results. Thank you for helping make it happen.

Obnam 1.0 was released during several months when I had no well-connected server to use for backups. Yesterday I installed a terabyte disk in a basement with a fiber optic network connection, so my backupless time is over.

Now, granted, I have a very multi-layered approach to backups; all my data is stored in git, most of it with dozens of copies automatically maintained, and with archival data managed by git-annex. But I still like to have a "real" backup system underneath, to catch anything else, and to back up those parts of my users' data that I have not given them tools to put into git yet...

My backup server is not in my basement, so I need to securely encrypt the backups stored there. Encrypting your offsite backups is such a good idea that I've always been surprised at the paucity of tools to do it. I got by with duplicity for years, but it's increasingly creaky, and the few times I've needed to restore, it's been a terrific pain. So I'm excited to be trying Obnam today.

So far I quite like it. The only real problem is that it can be slow when there's a transatlantic link between the client and the server. Each file backed up requires several TCP round trips, and the latency kills the effective bandwidth. Large files are still sent fast, and Obnam uses few resources on either the client or the server while running. And this mostly affects only the initial, full backup.
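A back-of-the-envelope model shows why many small files suffer. The function below is a hypothetical sketch: it assumes each file costs a fixed number of sequential round trips plus raw transfer time, and ignores TCP slow start, compression, and deduplication. The four round trips per file, 100 ms transatlantic RTT, and 100 Mbit/s link used in the example are illustrative assumptions, not measurements of Obnam.

```python
def estimated_seconds(n_files, total_bytes, rtt_s, bandwidth_bps, round_trips_per_file):
    """Idealized backup time: sequential per-file round trips plus raw transfer time."""
    latency = n_files * round_trips_per_file * rtt_s  # time spent waiting on the network
    transfer = total_bytes * 8 / bandwidth_bps        # time spent actually moving bytes
    return latency + transfer


# The same 1 GB of data, either as 100,000 small files or as one large file.
small_files = estimated_seconds(100_000, 10**9, 0.1, 100e6, 4)
one_big_file = estimated_seconds(1, 10**9, 0.1, 100e6, 4)
print("small files: %.1f hours" % (small_files / 3600))
print("one big file: %.1f seconds" % one_big_file)
```

Under these assumptions the small files take about 11 hours, almost all of it latency, while the single large file takes about 80 seconds. A protocol that streams data instead of waiting on each round trip would remove most of the `n_files` term.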

But the encryption and ease of use more than make up for this. The real killer feature of Obnam's encryption isn't that it's industry-standard encryption with gpg that can be trivially enabled with a single option (`--encrypt-with=DEADBEEF`). No, the great thing about it is its key management.

I generate a new gpg key for each system I back up. This prevents systems from reading each other's backups. But it means you have to back up the backup keys, or when a system is lost, its backup would be inaccessible.

With Obnam, I can instead just grant my personal gpg key access to the repository: `obnam add-key --keyid 2512E3C7`. Now both the machine's key and my gpg key can access the data. Great system; can't revoke access, but otherwise perfect. I liked this so much I stole the design and used it in git-annex too. :)

I'm also pleased I can lock down `.ssh/authorized_keys` on my backup server, to prevent clients from running arbitrary commands. Duplicity runs ad-hoc commands over ssh, which kept me from ever locking it down. Obnam can be easily locked down, like this:

```
command="/usr/lib/openssh/sftp-server"
```
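In `authorized_keys`, options like this go at the start of the line, before the client's public key. Here is a small hypothetical helper that builds such a line. It assumes Debian's `sftp-server` path (other distributions install it elsewhere) and adds OpenSSH's standard `no-port-forwarding`, `no-X11-forwarding`, `no-agent-forwarding`, and `no-pty` options, which go beyond the single `command=` option above.

```python
def restricted_key_line(public_key: str, command: str = "/usr/lib/openssh/sftp-server") -> str:
    """Prefix an OpenSSH public key with a forced command and forwarding restrictions."""
    if '"' in command:
        # Keep the sketch simple: refuse commands that would need quote escaping.
        raise ValueError("command must not contain double quotes")
    options = [
        'command="%s"' % command,  # the only thing this key may run
        "no-port-forwarding",
        "no-X11-forwarding",
        "no-agent-forwarding",
        "no-pty",
    ]
    # Options are comma-separated with no spaces, then a space, then the key itself.
    return ",".join(options) + " " + public_key.strip()
```

Newer OpenSSH releases also offer a single `restrict` option that disables all forwarding and pty allocation at once.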

This could be improved further, since clients can still read the whole filesystem with sftp. I'd like to have something like git-annex's git-annex-shell, which can limit access to only a specific repository. Hmm, if Obnam had its own server-side program like this, it could stream backup data to it using a protocol that avoids the round trips needed by the SFTP protocol, and fix the latency issue too. Lars, I know you've been looking for a Haskell starter project... perhaps this is it? :)
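A repository-limited server along the lines of git-annex-shell would typically be installed as the forced command and inspect `SSH_ORIGINAL_COMMAND`, which OpenSSH sets to the command the client asked to run. The sketch below shows only the access check, for a hypothetical `serve <path>` request. The request syntax, the function name, and the one-repository-per-key policy are all assumptions; this does not describe any existing Obnam program.

```python
import os
import shlex


def resolve_request(original_command, allowed_repo):
    """Hypothetical forced-command check: allow only 'serve <path>' for one repository.

    Returns the resolved repository path, or None if the request is refused.
    """
    if original_command is None:
        return None  # client asked for an interactive shell
    try:
        words = shlex.split(original_command)
    except ValueError:
        return None  # unbalanced quotes and similar garbage
    if len(words) != 2 or words[0] != "serve":
        return None
    # Normalize both paths so '..' components and symlinks can't sneak past the check.
    requested = os.path.realpath(words[1])
    allowed = os.path.realpath(allowed_repo)
    if requested != allowed:
        return None
    return allowed
```

Such a program would be wired up as the `command=` in `authorized_keys`, calling `resolve_request(os.environ.get("SSH_ORIGINAL_COMMAND"), ...)` and then speaking its own streaming protocol on stdin/stdout instead of SFTP.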