Sinking Creek passes by my sister's place and then does something strange: It disappears under ground, passes beneath both a hill and the Clinch River, to emerge on the other side as a spring and finally flow into the river. I've always wanted to find where it does this, and today we set out to do that.

So for an hour or so we waded and scrambled down the soft-bottomed creek, through water that was often waist deep, with sometimes deeper suprises, past stands of cane, watching the banks rise up higher and higher, until we were in a 50+ foot deep gully.

There were many signs of flooding and erosion from when the underground outlet gets plugged. Lots of interesting trees suspended on their roots.

The sinkhole itself was not very impressive, and is less so here, but the landscape around it was breathtaking. These photos can't really show the scale of the place.

|

On the way back we got to play hide-n-seek from a farmer on his tractor, which added a nice adrenalin rush. He was scarier than this creek doll.

(Photos courtesy Anna Hess; her blog about it.)

So, sha-1 is looking increasingly insecure in applications where birthday attacks are possible. ("Birthday attacks" ... what a phrase ... I hope my non-technical readers stopped at "sha-1".)

Two things about that:

First, I wanted to mention that I've today released jetring 0.15,

which adds support for arbitrary hashes in the index file, and deprecates

use of sha-1, going to sha-256 by default. There is a jetring-checksum -u

utility that can be used to upgrade sha-1 hashes in existing jetring index

files.

If you're using jetring in an application where changesets are provided by third paries, then a birthday attack could be possible (though not easy?), and you should upgrade your index. debian-maintainer is a good example of such a jetring user.

Secondly, our beloved git uses sha-1, and this seems unlikely to change soon or without significant pain. So, what kinds of collision attacks would you need to watch out for when using git? Here is a real-world example I've been pondering. Is it accurate?

- Alice creates a legitimate new version of a file in the linux kernel.

- Alice uses the new 252 work to generate two variants of the file

that sha-1 the same. One is suitable for public consumption, and one

does something nasty. (Note that this is still gonna be very hard

to accomplish for peer-reviewed source code. (Maybe the best file to patch

would be one containing firmware?) Also note that the collision actually

needs to occur on the data that

git-hash-object(1)will hash for the file.) - From the two variants of the file, Alice can generate two patches, a good and a bad. The good version is sent to Linus. Note that the sha-1's of the patches will not be the same, but when applied to a git repo, both patches will generate versions of the original file that sha-1 the same, despite being different.

- Linus accepts the patch and publishes it in his git repo, and tags a new release. His repo now contains the good variant of the file.

- Alice sets up her own git repo, a clone of Linus's, and tweaks it to contain the bad version of the file.

- Alice lets the world know about her git repo, and encourages people pull from it before pulling from Linus, to save him bandwidth, or for some other plausible reason. (May seem unlikly, but people actually do this in many scenarios in the git world.)

- Bob pulls from Alice's git repo, then pulls from Linus, and then builds the kernel, from Linus's tag. Git gets the bad version of Alice's file from her repo, and its sha-1 is ok. Alice has succeeded in deploying her evil code.

Here's a different scenario..

- Say that I have commit access to the

firmware-nonfreegit repository. Let's also suppose that releases offirmware-nonfreeare built by a build server that clones the git repository, and builds from it. - I take a new firmware file, and use birthday attacks to generate two

variants of it, one good and one bad, that

git-hash-objectwill generate the same sha-1 for. - I commit the good one to git, ensuring that the commit appears to have been made by a contributor who is on vacation, not me. I push it to the master git repository, and wait for my co-developers to pull it, test it out, etc.

- When a release of

firmware-nonfreeis immenant, I ssh in and modify the object in the master git repo, replacing it with the bad version of the firmware. Since all the developers have pulled the good version already, they are unlikely to notice this change. - The build server is kicked off, clones the repository, including the bad firmware, checks the gpg signature on the release tag, and deploys my evil code.

- I ssh back in and cover my traces, changing the object back to the good version.

This seems more plausible. This sort of attack is easy to accomplish with a subversion repository, and was one of the reasons I was glad to switch to git, since its checksums and signed tags seemed to prevent this kind of mischief. So, worrying, especially if your project uses such a build server.

Update: Thanks to the commenters for helping me correct my example. (I hope!)

I have finally figured out how to make clicking on a mp3 link in firefox cause the url to be instantly passed to mpd and played. If this seems like it should be easy, think again..

Firefox makes it hard, because while it lets you configure an program to run for a mp3, if the mp3 is not streamed, it will first download the whole mp3, and then run your program, passing it the local file. That's not what we want: It's slow, and it's much easier to queue an url in mpd than a new file.

The trick to making it work is an addon called MediaPlayerConnectivity. Confusingly, this addon sometimes refers to itself as "MPC" -- not to be confused with "mpc(1)". Warning: This addon is a ugly mess that wants to do a lot of other stuff than play mp3s, so make sure to disable all that.

MediaPlayerConnectivity's preferences allows you to specify a command to run for mp3 files (but annoyingly, not ogg vorbis). This command will be passed the url to the mp3. So you need to write a little script that runs mpc add and starts the queued url playing.

My take on that is mpqueue,

which uses the mpinsert command from mpdtoys to add the url just

after the currently playing song in the playlist.

Then it uses mpc next to skip to it. This way, after whatever you clicked

on finishes playing, mpd continues with whatever was next in your playlist,

and the music never stops.

BTW, since I use a mpd proxy this is all done on whatever remote mpd the proxy is currently set to use, rather than my laptop. Clicking on a link and having the sound system in the next room switch to playing it is pretty nice. :)

Previously: My mpd setup

Last time I created a code_swarm video, I forgot to document

the whole video editing process. Let's try to record the key

stuff this time.

- Run

code_swarm(see its docs). Note that its output is best if run on a display without subpixel rendering -- so desktop, not laptop. - Now to convert the frames into a dv video. (There is a small quality loss

here.)

ffmpeg -f image2 -r 24 -i ./frames/code_swarm-%05d.png -s ntsc out.dv - Import video into kino.

- Edit video in kino.

- Sound. Needs to be in wav format. Use effects, and Dub sound in to video.

- Final encoding. Kino's exporters are not very reliable; I have it export to a raw dv, and then use xvidenc (packaged in debian-multimedia) for the encoding.

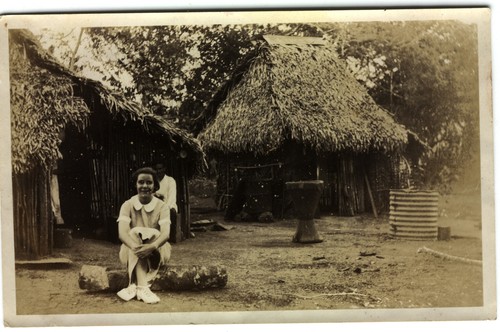

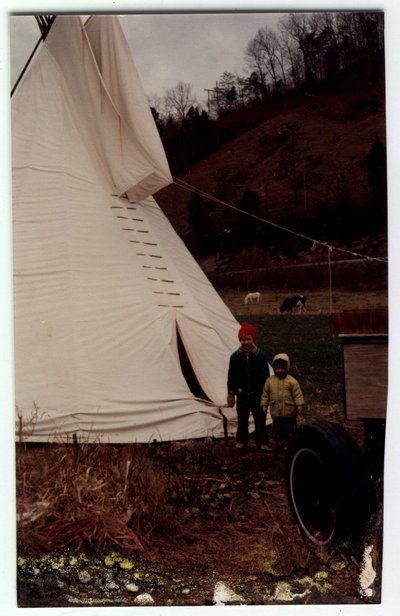

I was given a lot of old photos and scanned them in.

The most interesting are from my grandparents' time in Panama, and old family photos from as far back as 1918.

For me though, the gold mine are these pictures of our wacky life at the store and the farm. Follow the links to many more..

Some spring days just seem full to bursting with unusual experiences. I spent the night at the yurt and in just four hours today:

- Worked to protect my ethernet cables from spinning blades of doom by encasing them in pipes where my sister wants to mow.

- Just as I was getting into the creek for a dip, a hawk (or similar) flew down the creek through a tangle of trees and almost into my face. We were both pretty startled.

- Got in a splashing war with a dog. Lucy is capable of swimming out into the creek and splashing water three feet high, right at you. We had way too much fun, and now I think one of us is smarter than I'd thought, and the other, perhaps sillier.

- Got home to find a huge box from Amazon. Hmm, I hadn't ordered anything from Amazon... Double hmm, the inside was just full of packing paper... Yipes, there's a big red axe buried in here!

Thanks to Iain M from Melbourne who writes, "We have never met. I have used your software and gained insight from your blog, over the years. So thanks. Also, I have never bought someone an axe before. This is cool."

I forgot I had that in my wishlist, but now I can split wood with ease, when I run out of Amazon packaging material to burn.

I used to hate that question, particularly in high school, when showing my interlocutor the cover of whatever book I was tearing through was almost certain to end that conversation cold. Maybe that's part of why I switched to reading ebooks as soon as it was practical? Anyway, these days I'm happy to let you know what I'm reading; I just added my 1000th book on GoodReads.

The easiest way to explain GoodReads is that it's like Netflix's movie rating and recommendation system, except it can't automatically know what you've got checked out, and it doesn't really do many recommendations, sadly. But it's good about showing you what friends are reading. In other words, it's a social networking site around books. One of several, though the others I've tried seemed clunkier, or too flashy. Goodreads is fairly easy to use, though the UI could use some work in a few places. It's not been especially burdensome to note when I start and finish each book. The best part is that it can keep track of books I want to read.

Rating things on a five star system is not something I'm particularly good or consistant at, nor something I want to spend much time on, but I have painlessly rated about 500 books so far, as well as importing 500 more, unrated, from a list of books I read a decade ago. (500 others from that list failed to import.) I'd like to get a high percent of the books I've read recorded there, so I can a) get a grip on just how many books that is (guessing in the 3 thousand range?) and b) avoid accidental rereads and maybe remember some good books I've not read in a while.

Of course, as when using any service that silos my data these days, I have to worry about getting it back out. For at least the most important bit -- the list of books, dates, and ratings, GoodReads has a good data export capability, though it's a bit hard to find (hidden here) and not ameanable to automated backups. (Aside: Is anyone working on a one-stop utility that can automatically back up your data from all web silos? Needed.)

For the past six months or so, I've been in a bit of a dry spell for finding good new fiction; reading formal book review sites wasn't working for me (most reviews spoil books for me, or just turn me off to them); and ebooks cut down on that library serendipity. I think using this site is helping to break the drought for me, and I'm curious: Whatcha reading?

PS: Thanks to whoever sent me the The Design of Everyday Things (your name wasn't listed). I especially like that it's a used copy. Had that on my wishlist and been wanting to read that forever!

So, I'm considering a backup system that has as its goal to let you backup all your data from third party websites, easily.

The idea I have for its UI is you enter in the urls for all the sites you use, or feed it a bookmark file. By examining the URLs, it determines if it knows how to export data from each site, and prompts for any necessary login info. The result would be a list of sites, and their backup status. I think it's important that you be able to enter websites that the system doesn't know how to handle yet; backup of such sites will show as failed, which is accurate. It seems to almost be appropriate to have a web interface, although command-line setup and cron should also be supported.

For the actual data collection, there would be a plugin system. Some plugins could handle fairly generic things, like any site with an RSS feed. Other plugins would have site-specific logic, or would run third-party programs that already exist for specific sites. There are some subtle issues: Just because a plugin manages to suck an RSS feed or FOAF file off a site does not mean all the relevant info for that site is being backed up. More than one plugin might be able to back up parts of a site using different methods. Some methods will be much too expensive to run often.

As far as storing the backup, dumping it to a directory as files in whatever formats are provided might seem cheap, but then you can check it into git, or let your regular backup system (which you have, right?) handle it.

Thoughts, comments, prior art, cute program idea names (my idea is "?unsilo"), or am I wasting my time thinking about this?

While I've only skimmed the available protocol draft and whitepapers, I do have a couple thoughts about wave.

Firstly, I can imagine something like this supplanting not just email and instant messaging, but also web forums, blog comments, mailing lists, wikis, bug tracking systems, twitter, and probably various other forms of communication. (But not, apparently, version control systems.) While one might have an attachment to a few of those things, I think most of us would be glad to see most of them collectively die (especially twitter and web forums of course) and be replaced by a data-driven standard, with multiple clients, rather than their general ad-hoc, server-side generated nastiness. (The Project Xanadu guys would be probably be proud.)

Secondly, while Google are making all the right noises about open protocols, multiple providers, etc, and probably only plan to lock in about five nines percent of users to wave.google.com before competing implementations arrive, wave is not a fully distributed system. Spec says:

The operational transform used for concurrency control in wave is [currently] based on mutation of a shared object owned by a central master.

The wave server where the wave is created (IE that has the first operation) is considered the authoritative source of operations for that wave.

If the master server goes away, it sounds like a wave will become frozen and uneditable. There seems to be no way to change the master server after a wave is created, without ending up with an entirely different wave.

(Update: The demo, around minute 70, seems to show a private modification to a wave being made on another server -- so I may be missing a piece of the puzzle.)

The concurrency control protocol will be distributed at some point in the future

How many centralized systems have been successfully converted into distributed systems?

In a similar vein, after reading this whitepaper, I'm left unsure about whether it will be possible to respond to (edit) a wave while offline. Maybe, as clients can cache changes, but how well will conflict resolution work? It seems it would have to be done in the client, and there are some disturbing references here to clients throwing their local state away if they get out of sync with the server.